The following guest post is by John Wallin, the Director of the Computational and Data Science Ph.D. Program and Professor of Physics and Astronomy at Middle Tennessee State University.

Since the 1960s, scientific software has undergone repeated innovation cycles in languages, hardware capabilities, and programming paradigms. We have gone from Fortran IV to C++ to Python. We moved from punch cards and video terminals to laptops and massively parallel computers with hundreds to millions of processors. Complex numerical and scientific libraries and the ability to immediately seek support for these libraries through web searches have unlocked new ways for us to do our jobs. Neural networks are commonly used to classify massive data sets in our field. All these changes have impacted the way we create software.

In the last year, large language models (LLMs) have been created to respond to natural language questions. The underlying architecture of these models is complex, but the current generation is based on generative pre-trained transformers (GPT). In addition to the base architecture, they have recently incorporated supervised learning and reinforcement learning to improve their responses. These efforts resulted in a flexible artificial intelligence system that can help solve routine problems. Although the primary purpose of these large language models was to generate text, it became apparent that they could also generate code. These models are in their infancy, but they have been very successful in helping programmers create code snippets that are useful in a wide range of applications. I want to focus on two applications of transformer-based LLMs: ChatGPT by OpenAI and GitHub Copilot.

ChatGPT is perhaps the best-known and most widely used LLM. The underlying GPT LLM was released about a year ago, but a newer interactive version was made publicly available in November 2022. The user base exceeded a million after five days and has grown to over 100 million. Unfortunately, most of the discussion about this model has been either dismissive or apocalyptic. Some scholars have posted something similar to this:

“I wanted to see what the fuss is about this new ChatGPT thing, so I gave a problem from my advanced quantum mechanics course. It got a few concepts right, but the math was completely wrong. The fact that it can’t do a simple quantum renormalization problem is astonishing, and I am not impressed. It isn’t a very good “artificial intelligence” if it makes these sorts of mistakes!”

The other response that comes from some academics:

“I gave ChatGPT an essay problem that I typically give my college class. It wrote a PERFECT essay! All the students are going to use this to write their essays! Higher education is done for! I am going to retire this spring and move to a survival cabin in Montana to escape the cities before the machine uprising occurs.”

Of course, neither view is entirely correct. My reaction to the first viewpoint is, “Have you met any real people?” It turns out that not every person you meet has advanced academic knowledge in your subdiscipline. ChatGPT was never designed to replace grad students. A future version of the software may be able to incorporate more profound domain-specific knowledge, but for now, think of the current generation of AIs as your cousin Alex. They took a bunch of college courses and got a solid B- in most of them. They are very employable as an administrative assistant, but you won’t see them publish any of their work in Nature in the next year or two. Hiring Alex will improve your workflow, even if they can’t do much physics.

The apocalyptic view also misses the mark, even if the survival cabin in Montana sounds nice. Higher education will need to adapt to these new technologies. We must move toward more formal proctored evaluations for many of our courses. Experiential and hands-on learning will need to be emphasized, and we will probably need to reconsider (yet again) what we expect students to take away from our classes. Jobs will change because of these technologies, and our educational system needs to adapt.

Despite these divergent and extreme views, generative AI is here to stay. Moreover, its capabilities will improve rapidly over the next few years. These changes are likely to include:

- Access to live web data and current events. Microsoft’s Bing (currently in limited release) already has this capability. Other engines are likely to become widely available in the next few months.

- Improved mathematical abilities via linking to other software systems like Wolfram Alpha. ChatGPT makes mathematical errors routinely because it is doing math via language processing. Connecting this to symbolic processing will be challenging, but there have already been a few preliminary attempts.

- Increased ability to analyze graphics and diagrams. Identifying images is already routine, so moving on to understanding and explaining diagrams is not an impossible extension. This type of expansion would change how the system analyzes physics problems.

- Accessing specialized datasets such as arXiv, ADS, and even astronomical data sets. It would be trivial to train GPT-3.5 on these data sets and give it domain-specific knowledge.

- Integrating the ability to create and run software tools within the environment. We already have this capability in GitHub Copilot, but the ability to read online data and immediately do customized analysis on it is not out of reach for other implementations as well.

Even without these additions, writing code with GitHub Copilot is still a fantastic experience. Based on what you are working on, your comments, and its training data, it attempts to anticipate your next line or lines of code. Sometimes, it might try to write an entire function for you based on a comment or the name of the last function. I’ve been using this for about five months, and I find it particularly useful when using library functions that are a bit unfamiliar. For example, instead of googling how to add a window with a pulldown menu in Python, you write a comment explaining what you want to do, and the code is created below your comment. It also works exceptionally well at solving simple programming tasks such as creating a Mandelbrot set or downloading and processing data. I estimate that my coding speed for solving real-world problems using this interface has tripled.
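To make the comment-driven workflow concrete, here is a minimal sketch of the kind of comment one might type and the kind of function an assistant might propose for the Mandelbrot example. It was written by hand for illustration and is not actual Copilot output; the function names `mandelbrot_escape_counts` and `render_ascii`, and all of their parameters, are hypothetical.

```python
# Compute escape-time iteration counts for the Mandelbrot set on a grid.
def mandelbrot_escape_counts(width=60, height=24, max_iter=50,
                             re_range=(-2.0, 1.0), im_range=(-1.0, 1.0)):
    """Return a height x width list of iteration counts.

    A count equal to max_iter means the point did not escape
    within the iteration budget.
    """
    re_min, re_max = re_range
    im_min, im_max = im_range
    counts = []
    for row in range(height):
        im = im_min + (im_max - im_min) * row / (height - 1)
        line = []
        for col in range(width):
            re = re_min + (re_max - re_min) * col / (width - 1)
            c = complex(re, im)
            z = 0j
            n = 0
            # Iterate z -> z^2 + c until |z| exceeds 2 or the budget runs out.
            while n < max_iter and abs(z) <= 2.0:
                z = z * z + c
                n += 1
            line.append(n)
        counts.append(line)
    return counts


def render_ascii(counts, max_iter=50):
    """Draw '#' for points that never escaped and a space otherwise."""
    return "\n".join(
        "".join("#" if n == max_iter else " " for n in row) for row in counts
    )


if __name__ == "__main__":
    print(render_ascii(mandelbrot_escape_counts()))
```

Even for a task this small, the suggestion still has to be read and checked: the escape radius, the iteration limit, and the grid mapping are all places where a plausible-looking completion could be subtly wrong.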

However, two key issues need to be addressed when using the code: authorship and reliability.

When you create code using an AI, it draws on patterns learned from millions of lines of publicly available code. It predicts what you might be trying to do based on what others have done. For simple tasks like creating a call to a known function in a Python library, this is not likely to infringe on the intellectual property of someone’s code. However, when you ask it to create functions, it is likely to reproduce other code that accomplishes the task you want to complete. For example, there are perhaps thousands of examples of ODE integrators in open-source codes. Asking it to create such a routine for you will likely result in inadvertently using one of those codes without knowing its origin.

The only thing of value we produce in science is ideas. Using someone else’s thoughts or ideas without attribution can cross into plagiarism, even if that action is unintentional. Code reuse and online forums are regularly part of our programming process, but we have a higher level of awareness of what is and isn’t allowed when we are the ones googling the answer. Licensing and attribution become problematic even in a research setting. There may be problems claiming a code is our intellectual property if it uses a public code base. Major companies have banned ChatGPT from being used for this reason. At the very least, acknowledging that you used an AI to create the code seems like an appropriate response to this challenge. Only you can take responsibility for your code, but explaining how it was developed might help others understand its origin.

The second issue for the new generation of AI assistants is reliability. When I asked ChatGPT to write a short biographical sketch for “John Wallin, a professor at Middle Tennessee State University,” I learned that I had received my Ph.D. from Emory University and had studied Civil War and Reconstruction-era history. It confidently cited two books that I had authored about the Civil War. All of this was nonsense produced by a computer generating text that it thought I wanted to read.

It is tempting to think that AI-generated code would produce correct results. However, I have regularly seen major and minor bugs within the code it has generated. Some of the mistakes can be subtle but could lead to erroneous results. Therefore, no matter how the code is generated, we must continue to use verification and validation: verification to determine whether the code correctly implements our algorithms, and validation to determine whether it is the correct code to solve our scientific problem.
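As one hedged example of what verification can look like in practice, the sketch below checks a fourth-order Runge-Kutta integrator (whether hand-written or AI-generated) against a problem with a known analytic solution, dy/dt = -y with y(0) = 1, and confirms that the error shrinks at roughly the expected fourth-order rate when the step size is halved. The function names `rk4_step`, `integrate`, and `observed_order` are hypothetical, and the test problem is an assumption chosen for simplicity, not something taken from my own codes.

```python
import math


def rk4_step(f, t, y, h):
    """Advance y by one classical fourth-order Runge-Kutta step of size h."""
    k1 = f(t, y)
    k2 = f(t + h / 2, y + h * k1 / 2)
    k3 = f(t + h / 2, y + h * k2 / 2)
    k4 = f(t + h, y + h * k3)
    return y + h * (k1 + 2 * k2 + 2 * k3 + k4) / 6


def integrate(f, y0, t_end, n_steps):
    """Integrate dy/dt = f(t, y) from t = 0 to t_end using n_steps RK4 steps."""
    h = t_end / n_steps
    t, y = 0.0, y0
    for _ in range(n_steps):
        y = rk4_step(f, t, y, h)
        t += h
    return y


def observed_order(f, y0, t_end, exact, n_steps=10):
    """Estimate the convergence order by halving the step size once."""
    err_coarse = abs(integrate(f, y0, t_end, n_steps) - exact)
    err_fine = abs(integrate(f, y0, t_end, 2 * n_steps) - exact)
    return math.log2(err_coarse / err_fine)


if __name__ == "__main__":
    def decay(t, y):
        return -y  # analytic solution: y(t) = exp(-t)

    exact = math.exp(-1.0)
    print("error:", abs(integrate(decay, 1.0, 1.0, 10) - exact))
    print("observed order:", observed_order(decay, 1.0, 1.0, exact))
```

A check like this only covers verification. Whether the integrator is appropriate for the scientific problem at hand (stiffness, energy conservation, long integration times) is a separate validation question that still needs human judgment.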

Both authorship and reliability will continue to be issues when we teach our students about software development in our fields. At the beginning of the semester, I had ChatGPT generate “five group coding challenges that would take about 30 minutes for graduate students in a Computational Science Capstone course.” When I gave the challenges to my students, each one took them about 30 minutes to complete. I created solutions for ALL of them using GitHub Copilot in under ten minutes. Specifying when students can and can’t use these tools is critical, along with developing appropriate metrics for evaluating their work when using these new tools. We also need to push students toward better practices in testing their software, including making testing data sets available when the code is distributed.

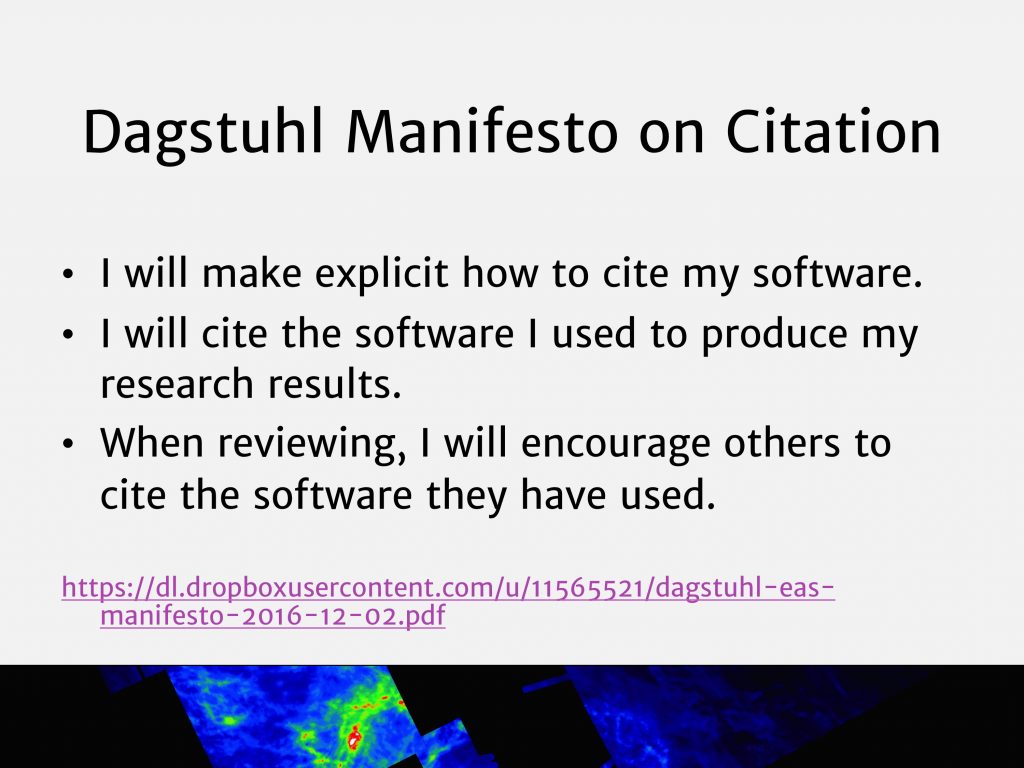

Sharing your software has never been more important, given these challenges. Although we can generate codes faster than ever, the reproducibility of our results matters. The only accurate description of your methodology is the code you used to create the results. Publishing your code when you publish your results will increase the value of your work to others. As the abilities of artificial intelligence improve, the core issues of authorship and reliability still need to be verified by human intelligence.

Addendum: The Impact of GPT-4 on Coding and Domain-Specific Knowledge

Written with the help of GPT-4; added March 20, 2023

Since the publication of the original blog post, there have been significant advancements in the capabilities of AI-generated code with the introduction of GPT-4. This next-generation language model continues to build on the successes of its predecessors while addressing some of the limitations that were previously observed.

One of the areas where GPT-4 has shown promise is in its ability to better understand domain-specific knowledge. While it is true that GPT-4 doesn’t inherently have access to specialized online resources like arXiv, its advanced learning capabilities can be utilized to incorporate domain-specific knowledge more effectively when trained with a more specialized dataset.

Users can help GPT-4 better understand domain-specific knowledge by training it on a dataset that includes examples from specialized sources. For instance, if researchers collect a dataset of scientific papers, code snippets, or other relevant materials from their specific domain and train GPT-4 with that data, the AI-generated code would become more accurate and domain-specific. The responsibility lies with the users to curate and provide these specialized datasets to make the most of GPT-4’s advanced learning capabilities.

By tailoring GPT-4’s training data to be more suited to their specific needs and requirements, users can address the challenges of authorship and reliability more effectively. This, in turn, can lead to more efficient and accurate AI-generated code, which can be particularly valuable in specialized fields.

In addition to the advancements in domain-specific knowledge and coding capabilities, GPT-4 is also set to make strides in the realm of image analysis. Although not directly related to coding, these enhancements highlight the growing versatility of the AI engine. While the image analysis feature is not yet publicly available, it is expected to be released soon, allowing users to tap into a new array of functionalities. This expansion of GPT-4’s abilities will enable it to understand and interpret images, diagrams, and other visual data, which could have far-reaching implications for various industries and applications. As GPT-4 continues to evolve, it is crucial to recognize and adapt to the ever-expanding range of possibilities that these AI engines offer, ensuring that users can leverage their full potential in diverse fields.

With the rapid advancements in AI capabilities, it is essential for researchers, educators, and developers to stay informed and adapt to the changes that GPT-4 and future models bring. As AI-generated code becomes more accurate and domain-specific, the importance of understanding the potential benefits, limitations, and ethical considerations of using these tools will continue to grow.