![Poster title: How Important is Software to Astronomy? Poster text: Software is the most used instrument in astronomy. All astronomers use software. Robust research requires reproducibility and transparency. Computational methods are methods, and should be easily discoverable and open to examination. Releasing source code demonstrates confidence in your results and improves efficiency in the discipline. Astrophysics Source Code Library (ASCL, ascl.net): • Is a free curated online registry and repository for astro research source codes • Has over 3400 entries • Is indexed by ADS and Web of Science • Includes all major codes that have enabled astro research • Makes it easy to find this software • Advocates for open source and FAIR practices • Is citable, and citations to its entries are tracked by major indexers • Adds new and old codes monthly. ASCL entries have been cited more than 16,000 times in over 240 journals. How to use the ASCL: Register your code with the ASCL to make it easier for others to find and to get an ASCL ID to use for citing the software. Search for useful downloadable software. Find preferred citation information for software you’ve used in research. Introduce students to a variety of methods available for solving common astronomical problems. … the community: Provides a curated resource for software methods. Links research articles with the software that enables that research; links are passed to ADS, so they also appear in that resource. Allows for citation to software on its own merits without the need to write a separate article for it. References: [1] Momcheva, I. & Tollerud, E., 2015. Software Use in Astronomy: an Informal Survey, doi:10.48550/arXiv.1507.03989 [2] ASCL dashboard, https://ascl.net/dashboard, retrieved 16 July 2024](https://ascl.net/wordpress/wp-content/uploads/2024/08/IAU2024ASCL_A1poster.jpg)

Software is by far the most used instrument in astronomy, and because robust research requires reproducibility and transparency, computational methods should be easily discoverable and open to examination. The Astrophysics Source Code Library (ASCL, ascl.net) makes the software that drives our discipline discoverable. The ASCL is a free online registry and repository for astrophysics research software. Containing over 3300 entries, it not only includes all the major codes that have enabled astro science, making this software easy to find, but also advocates for open source and FAIR practices and enables trackable, formal software citation. Its entries have been cited more than 16,000 times in over 200 journals and are indexed by ADS and Web of Science. This presentation covers how to use the ASCL and how it benefits the community.

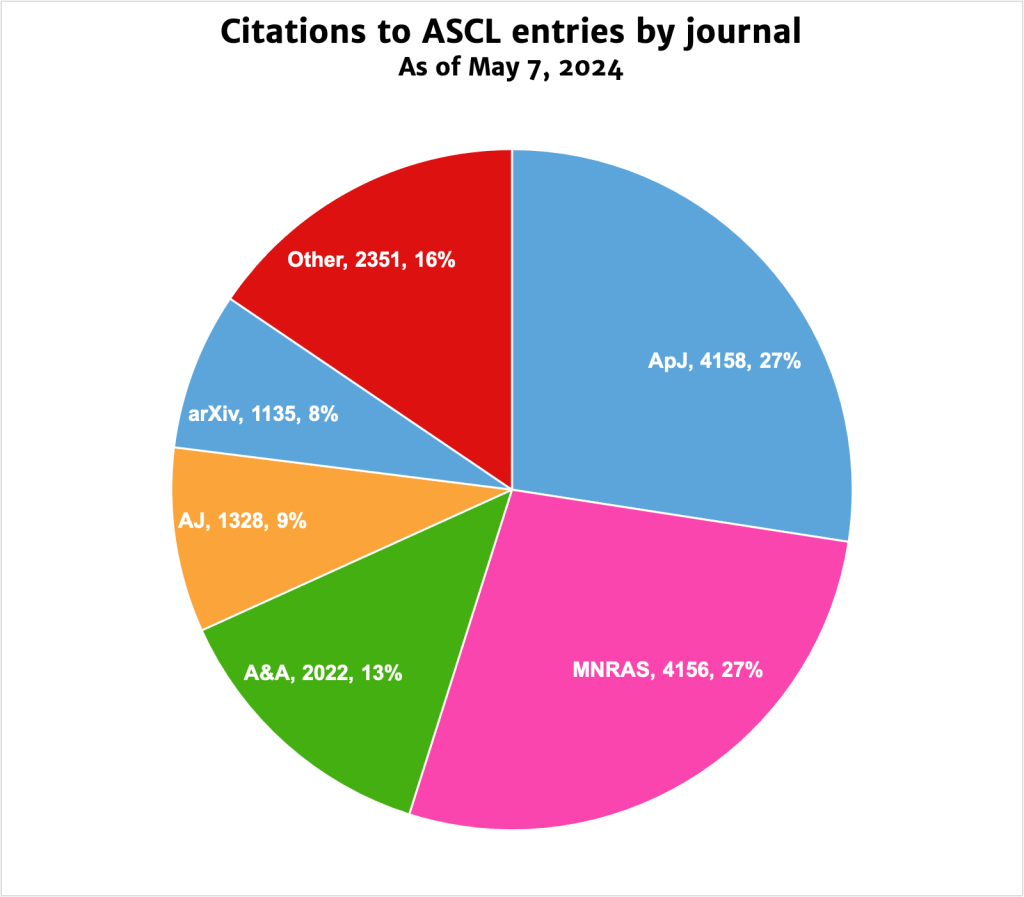


Alice Allen, Astrophysics Source Code Library/University of Maryland, MD, USA/Michigan Technological University, MI, USA